Services / Next.js AI Platform Development

Next.js AI Platform Development With Penetration Testing Built In

Next.js AI platform architect delivering production RAG systems with security and e2e automated browser testing built in. One engineer owns ingestion, retrieval, LLM orchestration, pen testing, and test infrastructure-so your platform ships without surprises.

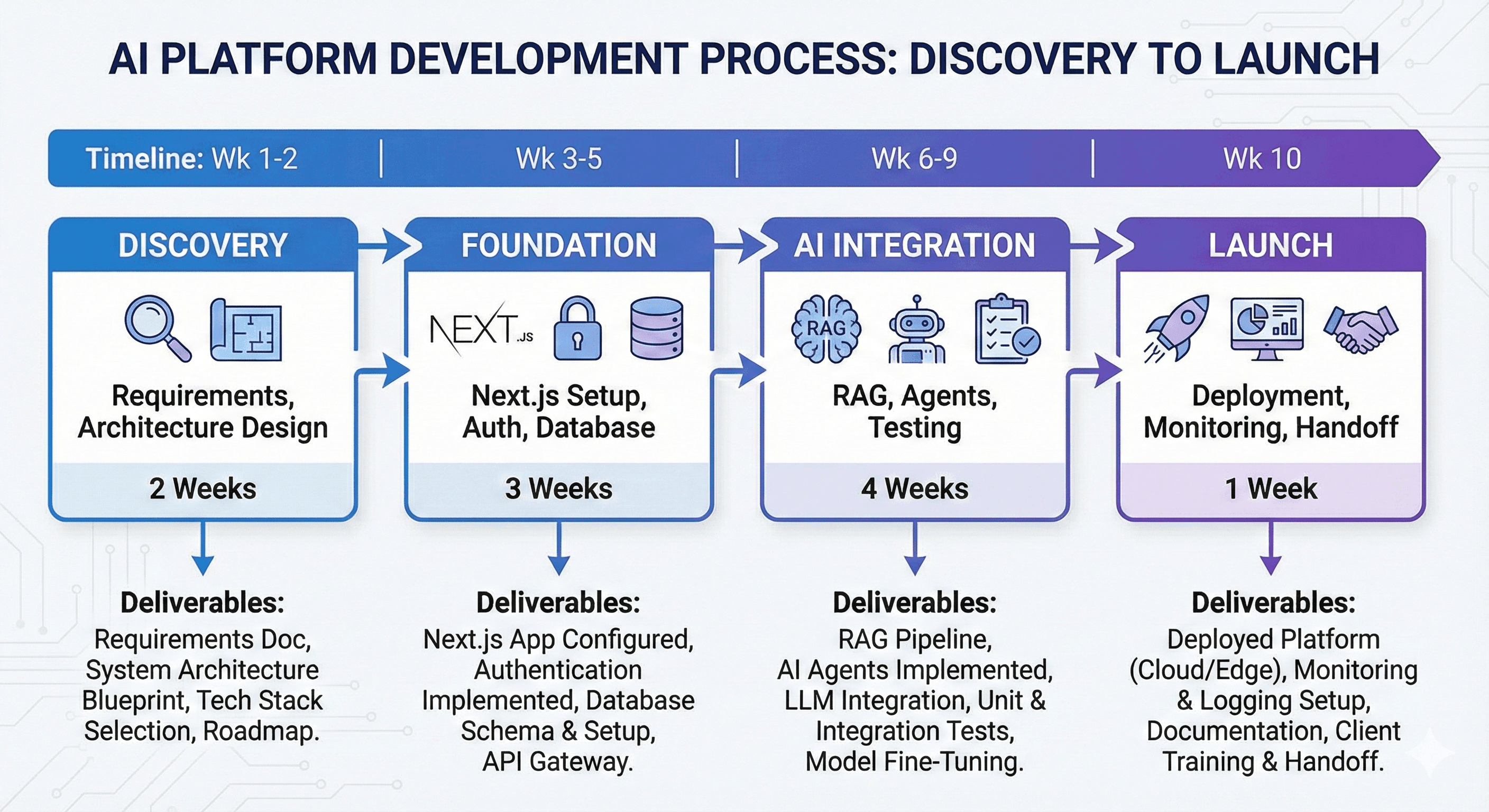

Typical delivery

6-8 weeks · Weekly checkpoints · One engineer owns full stack + security

Timeline includes:

- Weeks 1-2: Discovery, schema design, ingestion scaffolding

- Weeks 3-4: Embeddings, hybrid retrieval, tenant isolation

- Weeks 5-6: LLM orchestration, eval harness, PostHog dashboards

- Weeks 7-8: Pen testing, compliance artifacts, production cutover

Deliverable: Secure, observable, multi-tenant RAG platform with pen test report showing zero critical findings.

Why Next.js for AI platforms?

Next.js combines React's developer experience with production-grade features AI platforms need: streaming responses, edge runtime optimization, and seamless Vercel deployment.

Streaming LLM responses

Server Components and streaming APIs are built for LLM token streaming, giving users real-time feedback without complex WebSocket infrastructure.

Edge runtime optimization

Vercel Edge Functions run AI inference close to users globally, reducing latency for RAG retrieval and LLM calls.

Best-in-class DX

TypeScript, hot reload, and integrated testing (Playwright, Vitest) enable faster iteration when building AI features.

Related services

Next.js frontends pair with these services for complete AI platforms.

Python backends

Data pipelines, FastAPI services, and ML deployment infrastructure to power your Next.js frontend.

Fractional AI Architect

Ongoing technical leadership for teams scaling Next.js AI platforms.

AI agent development

MCP servers and LangGraph workflows built on Next.js/Vercel infrastructure.

What's included

One engineer. Full stack. Zero handoffs.

15 years shipping production platforms with AI and security baked in. I live in the stack from ingestion through identity, billing, observability, and pen testing-then deliver documentation and runbooks so investors and customers see how everything works.

- Hybrid retrieval (pgvector + BM25) tuned for your corpus

- Clerk tenant isolation, RLS, and audit-ready logging

- Next.js 16+, Vercel Edge, Neon/Supabase, Anthropic/OpenAI

- Security testing + compliance artifacts before launch

Need Python backend services?

This Next.js service handles frontends, dashboards, and user-facing UX. For data pipelines, FastAPI services, and ML deployment infrastructure:

View Python AI development services →Why one engineer beats agency teams

No relay race between RAG architect → Next.js dev → security tester. I ship the entire platform then prove it's secure. You stay in sync with weekly working sessions and transparent delivery docs.

Typical engagement model

- • 6-8 weeks for most builds (scales with complexity)

- • Weekly working sessions to review progress

- • Iterative delivery with staging environments from Week 2

- • Security testing baked into every sprint

- • Cancel anytime with 2 weeks notice

How Security-First Platform Development Works

RAG implementation, agent development, multi-tenant architecture, and security integration all happen inside the same sprint. No handoffs between specialists.

RAG Implementation Deliverables

Every engagement includes the components required to harden an AI platform for launch: ingestion, hybrid retrieval, guardrails, monitoring, and security testing. Need deeper help? Bundle with AI security consulting or a fractional AI architect engagement .

Process

How I Build & Secure AI Platforms

No agency relay race. One engineer owns discovery, architecture, development, and AI security testing so timelines don't slip and findings come with fixes.

- 01 - Discovery & audit. Review documents, security questionnaires, and target UX. Establish golden question set.

- 02 - Architecture & ingestion. Stand up pipelines, metadata, and background workers with monitoring.

- 03 - Retrieval & orchestration. Implement hybrid search, reranking, LLM prompts, and prompt-injection guardrails.

- 04 - Hardening & launch. Pen testing, eval harnesses, handover docs, and runbooks mapped to your required controls.

- Row-level security on pgvector tables with tenant context enforcement.

- Prompt injection sanitizers, output validation, and policy filters around tool calls.

- Eval harness with golden questions, citation checks, and drift detection.

- PostHog instrumentation for adoption, latency, and cost alerts.

- Penetration testing + prompt injection testing before launch with documented runbooks.

- Vercel preview deployments for every pull request

- Feature flags (PostHog) for gradual rollouts

- Database migrations with rollback plans

- Blue-green deployments to eliminate downtime

- Monitoring dashboards for adoption, latency, and errors

- Next.js 16+ / ShadCN UI

- Vercel Edge & Node runtimes

- Supabase or Neon with pgvector

- Clerk orgs, SSO/SAML, SCIM

- Stripe Billing + usage ledgers

- PostHog analytics & feature flags

- Inngest / Temporal ingestion queues

- Anthropic Claude / OpenAI GPT orchestration

Testing Infrastructure That Prevents Regressions

Security testing and automated browser testing run together. Playwright e2e tests catch UI regressions, Vitest validates business logic, and penetration testing proves the platform is secure-all before production.

- Cross-browser testing (Chromium, Firefox, WebKit)

- Visual regression detection for UI changes

- API mocking for predictable test environments

- Parallel execution for fast feedback loops

- Instant hot-reload during test development

- TypeScript-native testing without config overhead

- Mocking utilities for external dependencies

- 80%+ code coverage before production

- Adversarial prompt suites test LLM guardrails

- SQL injection and XSS scanners on every deploy

- Dependency vulnerability scanning (npm audit, Snyk)

- Rate limit validation prevents DoS attacks

- Automated Lighthouse audits for performance tracking

- Vercel preview deployments for manual QA

- Slack notifications for test failures

- Rollback automation if production tests fail

Why Automated Testing Matters for AI Platforms

AI platforms break in unpredictable ways. A prompt that worked yesterday might expose PII today. A retrieval query that returned 5 results now returns 500. Automated testing catches these regressions before users see them-and gives you confidence to ship daily instead of quarterly.

Recent builds & outcomes

Proof This Model Works

Selected outcomes from the last few quarters. No fluff-just the metrics founders care about.

Fintech RAG Launch

6 critical vulns patched pre-audit

- Multi-tenant RAG had tenant isolation bug leaking customer queries across accounts-caught during Week 4 pen testing.

- Missing rate limits on inference APIs would have enabled token-spend DoS-fixed before onboarding banks.

- Passed bank security review on the first attempt with zero findings.

- Zero delays to launch timeline-shipped Week 8 on schedule.

Agent Operations Platform

25 workflows live, 0 security regressions

- MCP servers with tool allowlists + audit logging shipped Week 6-sandboxed execution for untrusted tools.

- Credential misuse caught during testing when an agent attempted prod DB access-blocked by the policy engine.

- Pen testing + retests baked into every rollout checkpoint.

- Adoption + cost dashboards ready for Series A investor demos.

Multi-tenant SaaS Modernization

Zero findings on external pen test

- Rails/Next.js upgrade with RAG knowledge base and Stripe usage metering.

- 800+ automated tests + continuous eval harnesses caught regressions before production.

- External penetration test (required by enterprise customers) closed with zero findings.

- Support backlog dropped 40% once AI summaries + regression prevention landed.

FAQ

Common Questions

Honest answers to the questions founders ask about RAG implementation, platform architecture, and security testing.

What do engagements include?

Every build covers ingestion, embeddings, hybrid retrieval, LLM orchestration, observability, billing, and security testing. You get architecture, code, eval harnesses, penetration testing evidence, and runbooks-not just a demo.

Can you handle both development and security testing?

Yes. I scope, build, and harden the RAG pipeline while running prompt injection testing and penetration testing. You avoid juggling separate vendors for development, RAG implementation, and security consulting.

How fast can you deliver production systems?

Most builds run 6-8 weeks depending on integrations. Weeks 1-2 cover discovery and ingestion, Weeks 3-4 nail retrieval + identity/billing, Weeks 5-6 finalize LLM orchestration, evals, and pen testing. Larger scopes extend but stay iterative.

What testing coverage do you provide?

Every platform ships with Playwright e2e tests for critical flows, Vitest suites for business logic, and automated security testing (OWASP ZAP, Nuclei, prompt injection suites). We target 80%+ code coverage and zero critical findings before launch.

Do you only work with Bay Area teams?

I partner with Bay Area founders in person when it helps, but most engagements are remote-friendly. Slack, Loom, and weekly working sessions keep teams in sync regardless of timezone.

AI Platform Architecture Resources

These guides walk through platform architecture, hybrid search, and pipeline design in more detail-and each article links back to the security-first build process described here.

Ready to ship your secure AI platform?

Whether you need an MVP, platform scale-out, or architecture review, you work directly with an AI architect who builds and secures the entire stack.

See how I design retrieval layers in my complete guide to Retrieval-Augmented Generation.

Serving companies across the San Francisco Bay Area, Silicon Valley, and remote teams worldwide.